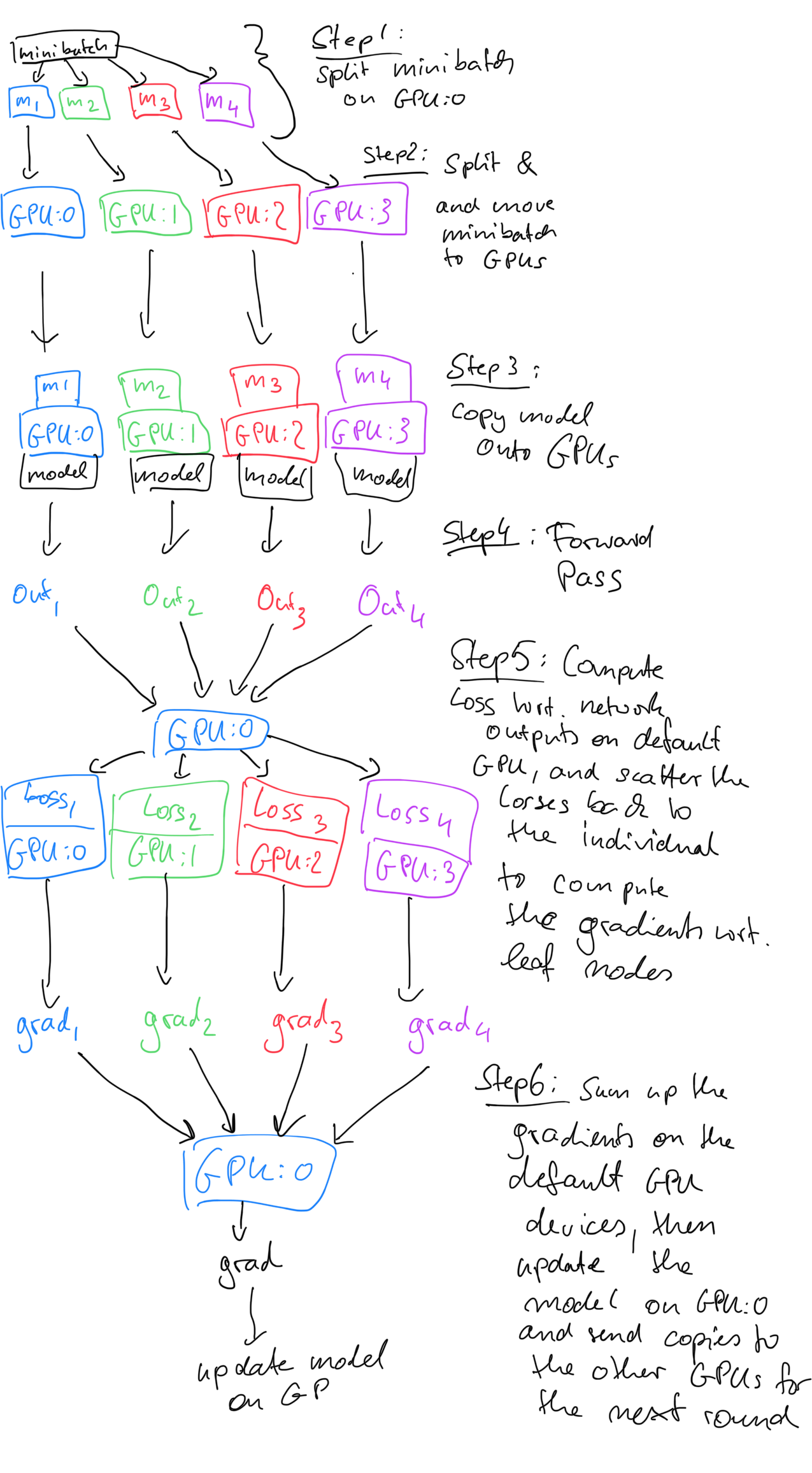

Learn PyTorch Multi-GPU properly. I'm Matthew, a carrot market machine… | by The Black Knight | Medium

Training language model with nn.DataParallel has unbalanced GPU memory usage - fastai users - Deep Learning Course Forums

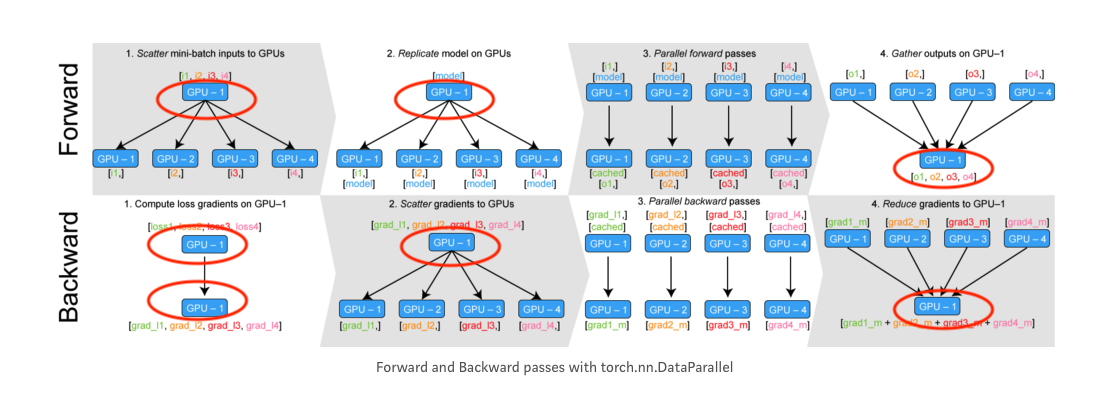

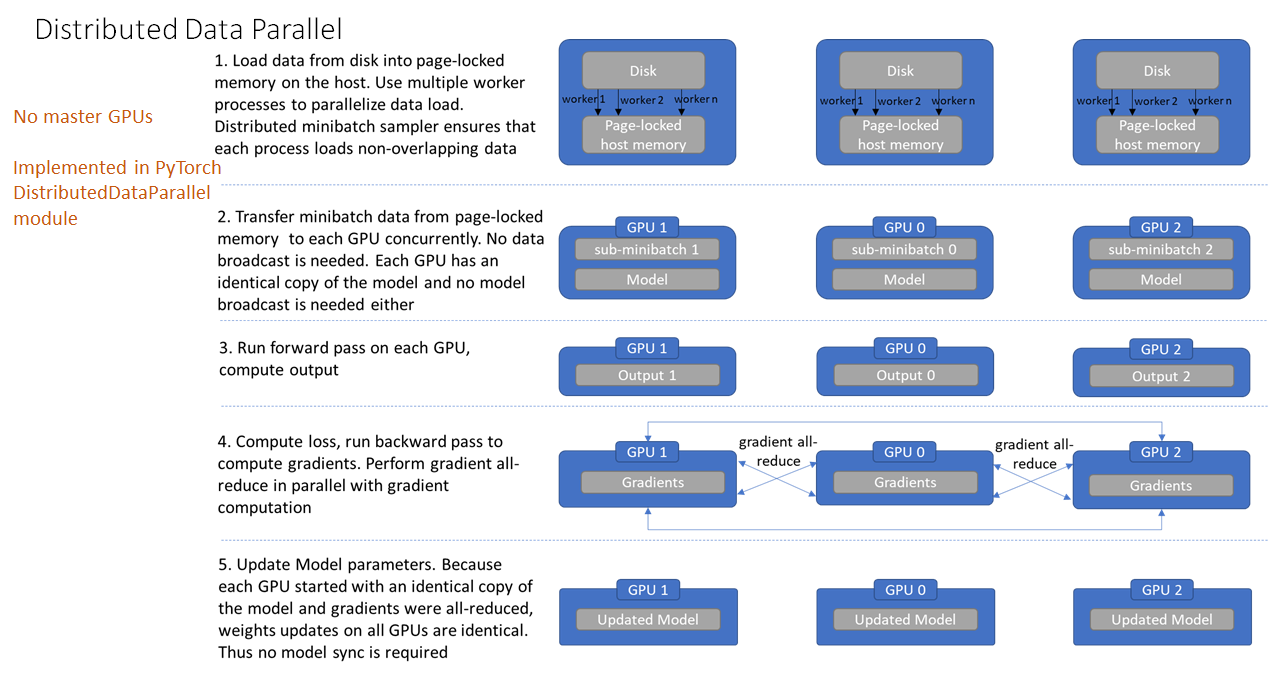

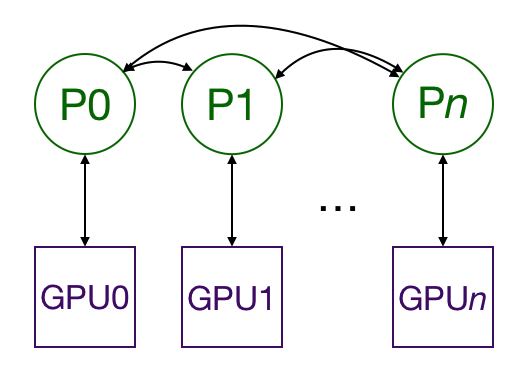

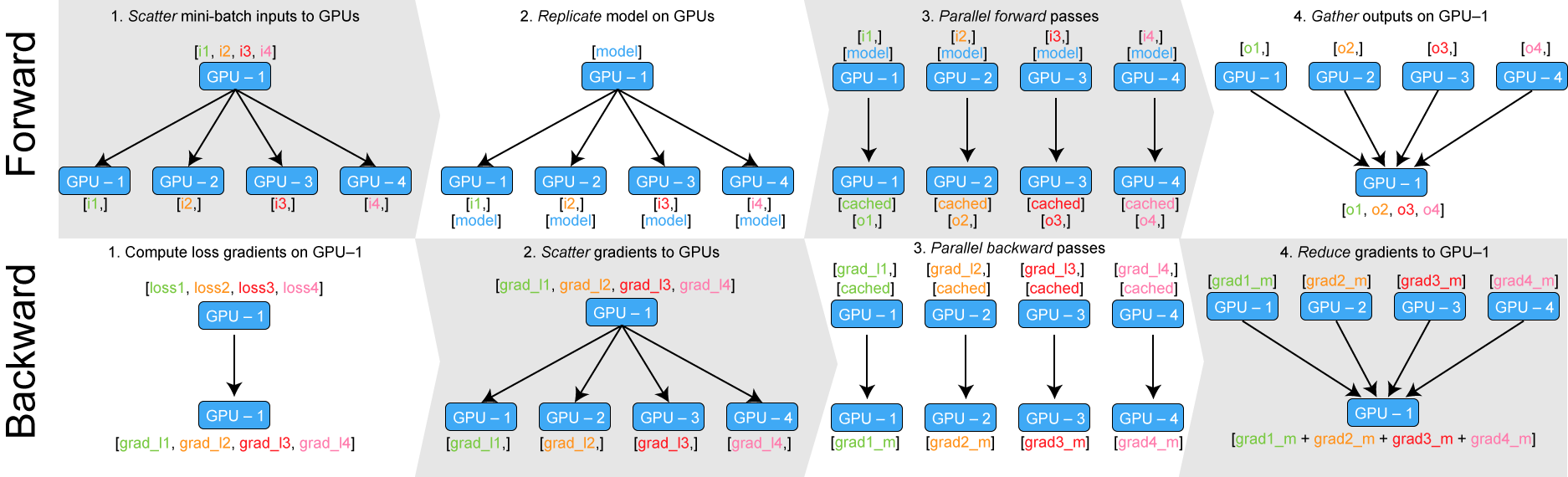

How distributed training works in Pytorch: distributed data-parallel and mixed-precision training | AI Summer